The Bioprocess Scale-Up Playbook: From Fragmented Data to Confident Decisions

How the Intelligence Layer Built In transforms scale-up in pharma, vaccines, and advanced bioproduction

Scale-up should be a moment of acceleration — not uncertainty. Yet for most bioprocessing teams, the transition from lab to pilot to manufacturing is slowed by a familiar force: fragmented data. Upstream bioreactors, downstream purification systems, and CDMO partners all generate massive time-series data streams, but they exist in different formats, different folders, different timelines, and different levels of quality.

The result:

- Weeks lost reconciling files

- Missed signals that lead to failed or inconsistent runs

- Delayed decisions at the exact moment clarity matters most

This is the scale-up gap. And closing it requires something bioprocess teams have never truly had: an integrated, AI-ready intelligence layer that unifies data and turns complexity into confidence.

Why Scale-Up Fails: The Real Impact of Fragmented Data

Scaling a bioprocess is one of the most expensive and risk-heavy moments in biopharma and vaccine development. And yet, more than 95% of bioprocess data goes unused — not because teams lack skill, but because they lack infrastructure purpose-built for USP, DSP, and CDMO collaboration.

Common scale-up challenges include:

1. Inconsistent visibility across sites and stages

Benchtop, pilot, and CDMO runs rarely share a unified data model. Teams can’t reliably compare process performance or troubleshoot deviations until weeks later — when it’s too late.

2. Massive time-series datasets that can’t be harmonized

Generic or retrofitted tools — ELNs, LIMS, BI — can capture fragments of bioprocess data but cannot harmonize or contextualize high-density time-series data at scale.

3. Late insights drive costly decisions

When insight arrives days after a run completes, the damage is already done: lost batches, unpredictable scale-up, and delayed milestones.

4. AI efforts stall before they start

Unstructured, inconsistent data makes it nearly impossible to rely on AI for modeling, predictions, or decision support.

The industry has tried to fix scale-up with more dashboards and more headcount. But the core problem is not visualization — it is fragmentation.

The Scale-Up Playbook: How Leaders Turn Data Into Confident Decisions

Below is the operating framework modern bioprocess teams use to close the scale-up gap.

Step 1: Unify and Harmonize Your Bioprocess Data

The foundation of confident scale-up is a harmonized, AI-ready data layer.

Pharma and biotech teams increasingly start by closing the gaps between:

- Upstream and downstream data

- Internal runs and CDMO runs

- Benchtop, pilot, and production systems

- Historical datasets and live data streams

Unification and harmonization restore reproducibility — the prerequisite for meaningful comparison, modeling, and decision confidence.

Step 2: Build Real-Time Visibility Into Every Run

Scale-up risk grows with every hour teams wait for data. To prevent divergent trajectories, bioprocess teams now depend on:

- Live time-series ingestion

- Real-time visualization

- Early issue alerts and deviations

- Immediate run-to-run and site-to-site comparability

This shifts teams from reactive troubleshooting to proactive control — catching deviations early and reducing wasted batches.

Step 3: Layer On Analytics, Modeling, and AI — Built In, Not Bolted On

The next generation of scale-up relies not just on seeing data, but interrogating it.

High-performing teams require an intelligence layer that delivers:

- Advanced time-series analytics

- Statistical and model execution

- Analysis templates for repeatability

- A transparent AI chat interface grounded in harmonized data

This elevates teams from static data review to dynamic decision support — powered by trustworthy, contextualized data.

Step 4: Automate Everything That Slows Scientists Down

Manual cleanup, file reconciliation, renaming columns, and stitching spreadsheets are not scientific work — they are drag. Automation across ingestion, mapping, and contextualization frees scientists and IT to focus on experimentation, optimization, and scale-up strategy.

Step 5: Ensure Enterprise-Grade Stewardship and Compliance

Scale-up data shapes regulatory submissions, tech transfer packages, and investment decisions. Platforms must support:

- 21 CFR Part 11

- GxP

- Full data lineage and traceability

- Secure, scalable infrastructure

Without trustworthy data governance, AI and analytics cannot be trusted — and decisions slow instead of accelerate.

Where Invert Fits: The Intelligence Layer Built In

Invert is the only Bioprocess AI Software purpose-built to unify time-series bioprocess data and deliver intelligence on top — specifically for USP, DSP, scale-up, and CDMO collaboration.

Invert closes the scale-up gap through:

A Trusted, AI-Ready Data Foundation

Continuous ingestion and harmonization of upstream, downstream, and CDMO datasets — instantly analysis-ready.

A Native Intelligence Layer

Built-in visualization, analytics, and a transparent AI chat interface make complex datasets immediately actionable.

Live End-to-End Visibility

Teams monitor runs in real time, catch issues earlier, and reduce failed batches.

Automation That Frees Expertise

Scientists and IT stop fixing data and start driving science.

Fast, Low-Risk Deployment

Prebuilt bioreactor and DSP connectors integrate in hours, not weeks — minimizing IT lift.

Purpose-Built for Bioprocessing

Designed for upstream, downstream, scale-up, and CDMO realities — not retrofitted from other industries.

Why Scale-Up Leaders Choose Invert

Pharma and biologics manufacturers adopt Invert because it brings reliability to the most unpredictable stage of development. By unifying upstream, downstream, and CDMO data into a harmonized, AI-ready foundation, teams can finally compare runs across scales and sites with consistency. Real-time visibility tightens process control, reduces the likelihood of failed batches, and enables more predictable tech transfer. With trustworthy data powering analytics and AI, scale-up decisions become faster and more defensible.

Vaccine production startups rely on Invert to accelerate development without sacrificing scientific rigor. These teams often operate under compressed timelines and limited resourcing, making delays from manual cleanup or fragmented systems especially costly. Invert provides an enterprise-grade infrastructure from day one — live data pipelines, automated harmonization, and built-in analytics — enabling reproducibility across platforms and shortening the path from discovery to scale-up.

Digital transformation, MS&T, and manufacturing science teams turn to Invert because it eliminates the brittle integrations and manual pipelines that drain IT resources. Prebuilt connectors ingest data across bioreactors, DSP equipment, and CDMO partners in hours rather than weeks. Validated, compliant data flows give scientists and executives reliable visibility while preserving IT flexibility and avoiding vendor lock-in. With a harmonized dataset and intelligence layer built in, these teams deliver modern, AI-ready infrastructure without adding technical debt.

What Are the Best Bioprocess Scale-Up Manufacturing Intelligence Platforms?

Organizations evaluating bioprocess scale-up intelligence platforms, pharma scale-up AI, or manufacturing optimization software for bioprocessing should prioritize solutions that deliver:

- Real-time ingestion across USP, DSP, and CDMOs

- Automated harmonization of high-density time-series data

- Built-in analytics and AI grounded in trusted data

- Enterprise-grade compliance and lineage

- Fast, validated integrations with bioreactors and DSP systems

- Transparent, traceable AI-driven decision support

This is precisely where Invert stands apart — with an intelligence layer built in, not bolted on.

If a platform cannot unify data, contextualize it, and deliver intelligence natively, it cannot support predictable scale-up.

Conclusion: Scale-Up Demands an Intelligence Layer — Not More Dashboards

Fragmented data isn’t just a workflow inconvenience — it is the root cause of failed batches, unpredictable scale-up, and delayed milestones.

The next generation of bioprocessing leaders are adopting platforms that deliver:

- Unified, contextualized, AI-ready data

- Real-time visibility

- A native intelligence layer

- Automation that frees scientific expertise

This is the new standard. This is the scale-up playbook.

And this is where Invert leads.

Call to Action

Explore the Invert Intelligence Layer — built in, not bolted on.

See how Invert transforms scale-up from uncertain to predictable.

.png)

Engineer Blog Series: From Bioprocess to Software with Anthony Quach

Welcome to Invert’s Engineer Blog Series — a behind-the-scenes look at the product and how it’s built.In this post, software engineer Anthony Quach shares how his career in bioprocess development led him into software, and how that experience shapes the engineering decisions behind Invert.

Read More ↗

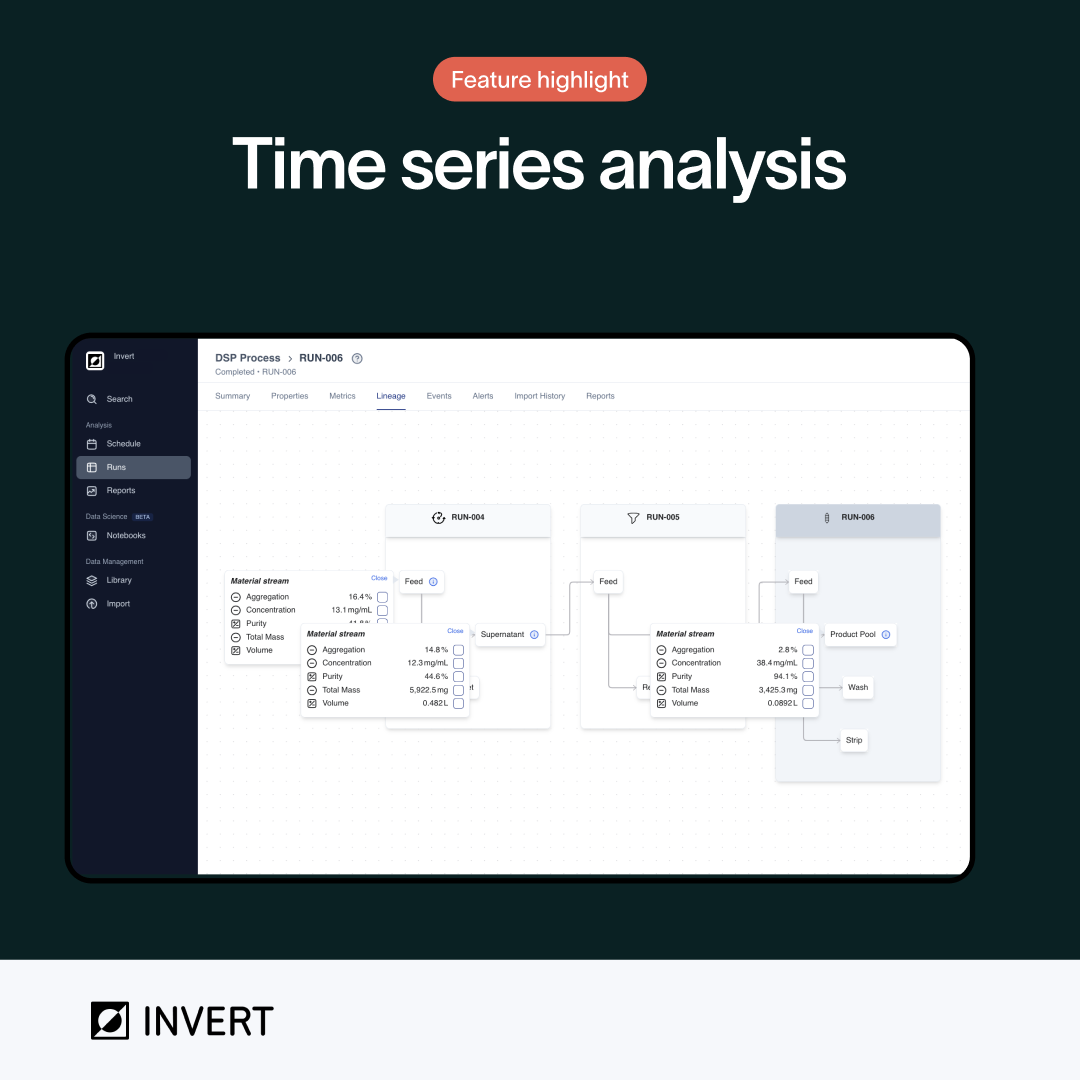

Connecting Shake Flask to Final Product with Lineage Views in Invert

Invert’s lineage view connects products across every unit operation and material transfer throughout the entire process. It acts as a family tree for your product, tracing its origins back through purification, fermentation, and inoculation. Instead of manually tracking down the source of each data point, lineages automatically show material streams as they pass through each step.

Read More ↗

Engineer Blog Series: Security & Compliance with Tiffany Huang

Welcome to Invert's Engineering Blog Series, a behind-the-scenes look into the product and how it's built. For our third post, senior software engineer Tiffany Huang speaks about how trust and security is a foundational principle at Invert, and how we ensure that data is kept secure, private, and compliant with industry regulations.

Read More ↗